eBPF Learning in Practice, Part 2 -- The bcc tools Networking Toolkit

An overview of the networking tools in the bcc tools collection and how to use them.

1. Background

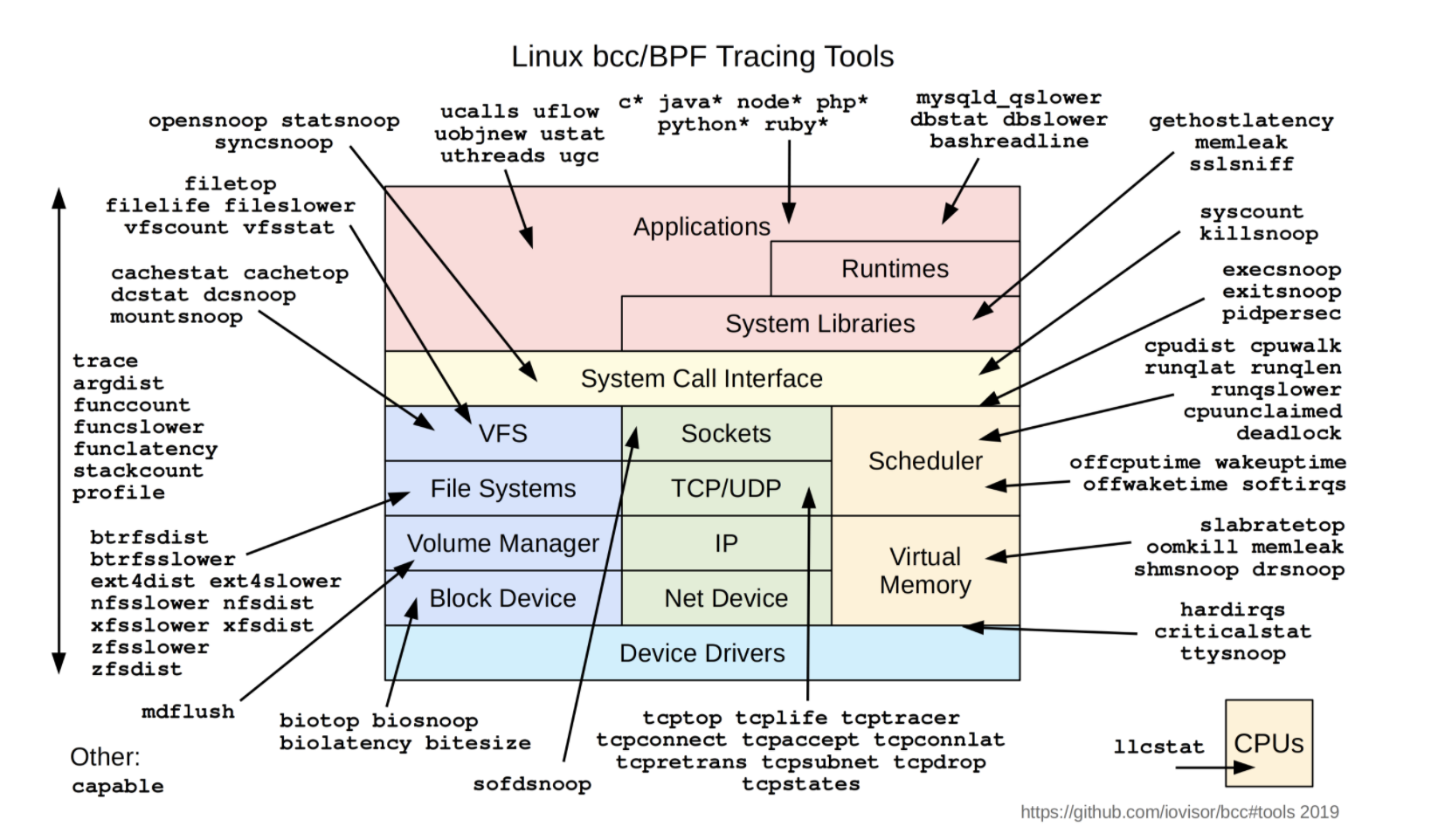

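The previous post (eBPF Learning in Practice, Part 1 – A First Look at eBPF) mentioned that performance expert Brendan Gregg and others have written many BCC and bpftrace tools. These tools can be used out of the box and cover much of our day-to-day troubleshooting and analysis. This post starts with the networking-related tools.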

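Note: this blog is a personal study-and-practice notebook. It can serve as a reference, but it is not a systematic tutorial and may contain errors or omissions; corrections are welcome. For systematic study, refer to the original links.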

2. Linux Performance Analysis in 60 Seconds

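Performance tools exist to make troubleshooting more efficient in practice. With so many of them available, which should we pick? For the initial triage of performance problems on Linux, let's start with the best practice the experts have distilled.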

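2.1. The 60-second checklist: Linux command version

Within 60 seconds, use the following 10 commands to get an overall picture of system resource usage: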

```shell
uptime
dmesg | tail
vmstat 1
mpstat -P ALL 1
pidstat 1
iostat -xz 1
free -m
sar -n DEV 1
sar -n TCP,ETCP 1
top
```

For details, see: Linux Performance Analysis in 60,000 Milliseconds

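- uptime: quick look at the load averages.
- dmesg | tail: the last 10 kernel log messages. Check them for errors that could cause performance problems (e.g. OOM kills, TCP drops). Don't skip this step: dmesg is always worth checking!
- vmstat 1: system status (CPU utilization, memory usage, swapping, I/O, etc.).
- mpstat -P ALL 1: per-CPU utilization.
- pidstat 1: per-process CPU utilization.
- iostat -xz 1: disk I/O.
- free -m: system memory usage.
- sar -n DEV 1: network device throughput.
- sar -n TCP,ETCP 1: key TCP metrics (active/passive connection rates, retransmissions, errors).
- top: a fairly comprehensive overview of system load.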
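uptime reports the same three load averages that the kernel exposes in /proc/loadavg; tcptop in section 3.2 also prints that file's raw line in its header. Below is a small parser sketch, assuming the standard five-field layout described in proc(5). The function name parse_loadavg is made up for illustration:

```python
def parse_loadavg(line: str) -> dict:
    """Parse a line in /proc/loadavg format, e.g. '0.13 0.05 0.01 2/319 7868'."""
    one, five, fifteen, tasks, last_pid = line.split()
    runnable, total = tasks.split("/")
    return {
        "load1": float(one),        # 1-minute load average
        "load5": float(five),       # 5-minute load average
        "load15": float(fifteen),   # 15-minute load average
        "runnable": int(runnable),  # currently runnable scheduling entities
        "total": int(total),        # scheduling entities that currently exist
        "last_pid": int(last_pid),  # PID most recently created on the system
    }
```

On a Linux host, parse_loadavg(open("/proc/loadavg").read()) returns the live values.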

2.2. The 60-second checklist: BPF version

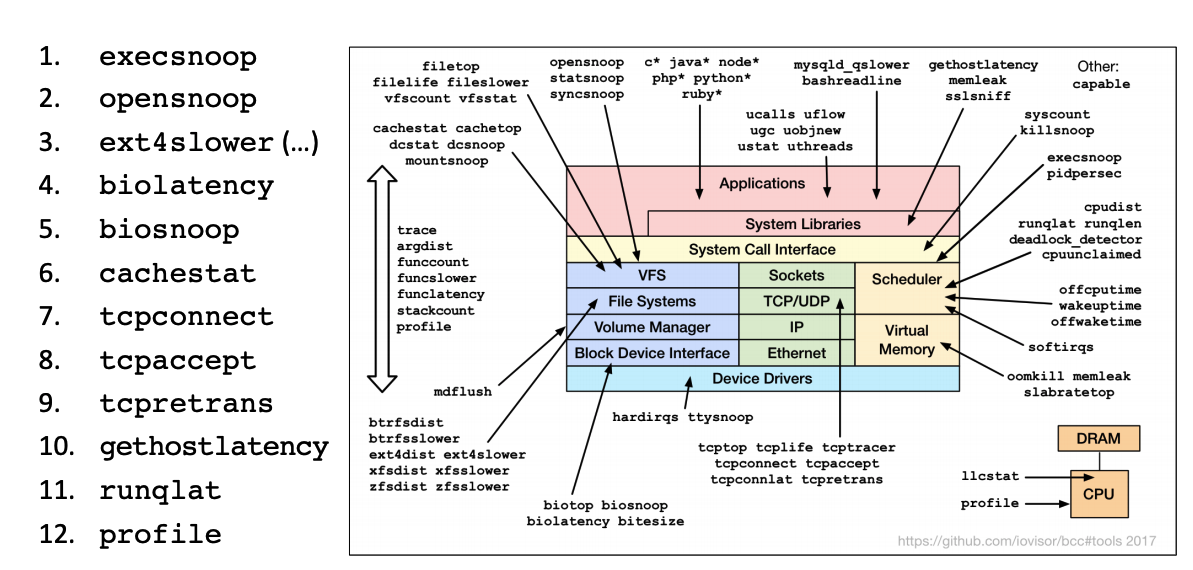

For reference: the bcc tools diagram here is the 2017 version, while the one at the top of the post is the 2019 version.

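3. Networking tools in bcc tools

From the bcc tools diagram at the beginning, the networking-related tools are:

sofdsnoop, tcptop, tcplife, tcptracer, tcpconnect, tcpaccept, tcpconnlat, tcpretrans, tcpsubnet, tcpdrop, tcpstates

In addition, there are tcprtt, tcpsynbl, solisten, netqtop, etc., plus softirqs for soft interrupt statistics.

Below we look at what each one does and its basic usage.

Help is available via -h or man, e.g. ./tcptop -h, or man bcc-tcptop / man tcptop.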

3.1. sofdsnoop: trace file descriptors passed via sockets

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./sofdsnoop -h
usage: sofdsnoop [-h] [-T] [-p PID] [-t TID] [-n NAME] [-d DURATION]

Trace file descriptors passed via socket

examples:
    ./sofdsnoop # trace passed file descriptors
    ./sofdsnoop -T # include timestamps
    ./sofdsnoop -p 181 # only trace PID 181
    ./sofdsnoop -t 123 # only trace TID 123
    ./sofdsnoop -d 10 # trace for 10 seconds only
    ./sofdsnoop -n main # only print process names containing "main"
```

Sample output: ssh into 192.168.1.150, then log out of the ssh session:

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./sofdsnoop
ACTION TID COMM SOCKET FD NAME
SEND 1199 systemd-logind 12:socket:[20935] 25 N/A
SEND 1122 dbus-daemon 6:N/A 21 N/A
SEND 6701 sshd 12:socket:[65823] 10 /dev/ptmx
SEND 6701 sshd 12:socket:[65823] 13 N/A
```

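3.1.1. Q&A: what does "file descriptors passed via socket" mean?

Observation and question:

I started a service on port 8000 with python -m http.server and sent a request to it from another machine with curl ip:8000, but sofdsnoop recorded nothing. Why? Doesn't network communication open file descriptors?

Question: what exactly does sofdsnoop trace? The man page says little about it, searching online didn't turn up a clear answer, and I couldn't quite follow the code logic either. (Afterwards: once I had asked GPT and understood it, I realized the first step should have been to understand what "file descriptors passed via socket" actually means.)

ASK:

- Q1: Look into this popular project https://github.com/iovisor/bcc and explain tools/sofdsnoop for me.
- Q2: Explain the kernel-side code logic of sofdsnoop in detail.
- Q3: If I start a service on port 8000 with python -m http.server and another machine sends a socket connection request with curl, can sofdsnoop trace it?
- Q4: I don't understand file descriptor passing. What scenarios is it used in?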

GPT's answer to Q3:


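`python -m http.server` starts a simple HTTP server locally, listening on port 8000 by default. The server is built on Python's `http.server` module and responds to HTTP requests rather than handling raw socket connections itself.

`sofdsnoop` traces file descriptors being passed over sockets, which in practice means SCM_RIGHTS ancillary messages sent over UNIX domain sockets. The HTTP server started by `http.server` does not pass file descriptors between processes at all.

When you send an HTTP request with `curl` from another machine to the server started by `python -m http.server`, you establish a TCP connection. You then send the HTTP request and receive the HTTP response over that connection. No file descriptor is passed anywhere in this process.

Therefore, **sofdsnoop cannot trace the HTTP request sent by `curl`**, because that request does not involve passing file descriptors. `sofdsnoop` is meant for tracing file descriptors passed over sockets, i.e. SCM_RIGHTS messages sent over UNIX domain sockets.

To trace the TCP/UDP connections themselves (rather than file descriptor passing), you need other tools, such as `tcpdump`, `ss`, `netstat` or `lsof`. These help you inspect and analyze network connections and socket state.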

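GPT's answer to Q4 basically cleared it up:

- File descriptor passing is an important inter-process communication (IPC) mechanism, especially on UNIX and UNIX-like systems.
- Passing a file descriptor means one process sends the descriptor of a file, socket or pipe it has open to another process. The receiving process can then use that descriptor directly to access the resource, without reopening it or re-establishing the connection.
- Scenarios
  - Inter-process communication: by passing file descriptors, two processes can share access to the same file, socket or pipe, which enables data exchange and synchronization between them.
  - Network services: a server can pass the descriptor of an established socket connection to a child process. The child then handles the request and response, which improves concurrency.
  - File sharing: by passing file descriptors, multiple processes can access and modify the same file, enabling file sharing, collaborative editing and similar features.
- Implementation
  - UNIX domain sockets: an IPC mechanism between processes on the same machine. Through a UNIX domain socket, a process can send the descriptor of a file, socket or pipe it has open to another process. The receiver can then use that descriptor directly to access the resource.
  - SCM_RIGHTS messages: UNIX and UNIX-like systems have a special ancillary message type called SCM_RIGHTS. It lets a process attach one or more file descriptors to a message it sends. The receiver extracts the descriptors from the message and uses them to access the corresponding resources.
- Caveat: file descriptor passing only works between processes on the same machine, because a descriptor refers to an object in the local kernel. Processes on different machines cannot share descriptors and have to exchange data over network sockets instead.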
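To see what kind of event sofdsnoop is looking for, here is a minimal sketch. It requires Python 3.9+ on Linux and uses the standard library's socket.send_fds/socket.recv_fds helpers, which wrap sendmsg()/recvmsg() with an SCM_RIGHTS ancillary message. The sketch passes the write end of a pipe over a UNIX domain socketpair, then writes through the received descriptor. The function name pass_fd_demo is hypothetical. Running it while sofdsnoop is tracing should, I expect, produce SEND/RECV lines, though that output is untested here.

```python
import os
import socket

def pass_fd_demo(message: bytes = b"hello via passed fd") -> bytes:
    """Pass a pipe's write end over a UNIX socketpair with SCM_RIGHTS,
    write through the received descriptor, and read the data back."""
    sender, receiver = socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM)
    read_fd, write_fd = os.pipe()
    try:
        # One byte of normal data plus an SCM_RIGHTS message carrying write_fd.
        socket.send_fds(sender, [b"x"], [write_fd])
        # The receiver gets a new descriptor number for the same open pipe.
        _data, fds, _flags, _addr = socket.recv_fds(receiver, 1, 1)
        received_fd = fds[0]
        try:
            os.write(received_fd, message)
        finally:
            os.close(received_fd)
        return os.read(read_fd, len(message))
    finally:
        os.close(read_fd)
        os.close(write_fd)
        sender.close()
        receiver.close()
```

In a real program the two ends would live in different processes. That is the "one process hands an open file to another" scenario described above.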

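Mapping this onto the ssh login trace above: in sofdsnoop's output, SOCKET is the socket the message is sent over and FD is the descriptor being passed. So the sshd lines show sshd sending descriptors through its socket fd 12 (socket inode 65823) to another process. One is fd 10, which is /dev/ptmx (the pseudo-terminal master for the login session); the other is fd 13. This is presumably IPC between sshd's cooperating processes while the session is being set up.

Quite a heavyweight to start with...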

3.2. tcptop: summarize TCP send/receive throughput

```
# man bcc-tcptop
NAME
    tcptop - Summarize TCP send/recv throughput by host. Top for TCP.

SYNOPSIS
    tcptop [-h] [-C] [-S] [-p PID] [--cgroupmap MAPPATH]
    [--mntnsmap MAPPATH] [interval] [count]

DESCRIPTION
    This is top for TCP sessions.

    This summarizes TCP send/receive Kbytes by host, and prints a summary that refreshes, along other system-wide metrics.

    This uses dynamic tracing of kernel TCP send/receive functions, and will need to be updated to match kernel changes.
```

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcptop -h
usage: tcptop [-h] [-C] [-S] [-p PID] [--cgroupmap CGROUPMAP]
              [--mntnsmap MNTNSMAP]
              [interval] [count]

Summarize TCP send/recv throughput by host

positional arguments:
  interval output interval, in seconds (default 1)
  count number of outputs

examples:
    ./tcptop # trace TCP send/recv by host
    ./tcptop -C # don't clear the screen
    ./tcptop -p 181 # only trace PID 181
    ./tcptop --cgroupmap mappath # only trace cgroups in this BPF map
    ./tcptop --mntnsmap mappath # only trace mount namespaces in the map
```

Example: start a service on port 8000 with python -m http.server, then request it from the same machine with curl ip:8000:

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcptop -C 5
Tracing... Output every 5 secs. Hit Ctrl-C to end

14:11:52 loadavg: 0.13 0.05 0.01 2/319 7868

PID COMM LADDR RADDR RX_KB TX_KB
4891 sshd 192.168.1.150:22 192.168.1.2:61572 0 0

14:11:57 loadavg: 0.12 0.04 0.01 2/319 7873

PID COMM LADDR RADDR RX_KB TX_KB
6704 sshd 192.168.1.150:22 192.168.1.2:62262 0 2
7869 7869 192.168.1.150:55890 192.168.1.150:8000 2 0
5110 python 192.168.1.150:8000 192.168.1.150:55890 0 2
4891 sshd 192.168.1.150:22 192.168.1.2:61572 0 0
6393 sshd 192.168.1.150:22 192.168.1.2:61936 0 0
5059 sshd 192.168.1.150:22 192.168.1.2:61727 0 0
```
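tcptop adds up the bytes of each TCP send/receive per process and address pair, then prints whole KB per interval. The aggregation step can be sketched in plain Python. TcpEvent and summarize are hypothetical stand-ins for what the real tool keeps in BPF maps, and rounding down to whole KB is my assumption about its display.

```python
from collections import defaultdict
from typing import Iterable, List, NamedTuple, Tuple

class TcpEvent(NamedTuple):
    """Hypothetical per-call record; the real tool sums these inside BPF maps."""
    pid: int
    comm: str
    laddr: str      # "ip:port"
    raddr: str      # "ip:port"
    direction: str  # "rx" or "tx"
    size: int       # bytes moved by one send/recv call

Row = Tuple[int, str, str, str, int, int]  # (pid, comm, laddr, raddr, RX_KB, TX_KB)

def summarize(events: Iterable[TcpEvent]) -> List[Row]:
    """Sum bytes per (pid, comm, laddr, raddr) and convert them to whole KB."""
    totals = defaultdict(lambda: [0, 0])  # key -> [rx_bytes, tx_bytes]
    for ev in events:
        key = (ev.pid, ev.comm, ev.laddr, ev.raddr)
        totals[key][0 if ev.direction == "rx" else 1] += ev.size
    rows = [(*key, rx // 1024, tx // 1024) for key, (rx, tx) in totals.items()]
    rows.sort(key=lambda r: r[4] + r[5], reverse=True)  # busiest flows first
    return rows
```

A flow that moved less than 1 KB in the interval shows as 0 0, like the idle sshd rows above.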

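3.3. tcplife: trace TCP sessions

Useful for workload characterization and flow accounting: it identifies which connections are happening and how much data each one transfers.

It traces tracepoint:sock:inet_sock_set_state (available since Linux 4.16). On older kernels it falls back to kernel dynamic tracing.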

```
# man bcc-tcplife
DESCRIPTION
    This tool traces TCP sessions that open and close while tracing, and prints a line of output to summarize each one. This includes the IP addresses,
    ports, duration, and throughput for the session. This is useful for workload characterisation and flow accounting: identifying what connections are
    happening, with the bytes transferred.

    This tool works using the sock:inet_sock_set_state tracepoint if it exists, added to Linux 4.16, and switches to using kernel dynamic tracing for
    older kernels. Only TCP state changes are traced, so it is expected that the overhead of this tool is much lower than typical send/receive tracing.
```

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcplife -h
usage: tcplife [-h] [-T] [-t] [-w] [-s] [-p PID] [-L LOCALPORT]
               [-D REMOTEPORT]

Trace the lifespan of TCP sessions and summarize

examples:
    ./tcplife # trace all TCP connect()s
    ./tcplife -T # include time column (HH:MM:SS)
    ./tcplife -w # wider columns (fit IPv6)
    ./tcplife -stT # csv output, with times & timestamps
    ./tcplife -p 181 # only trace PID 181
    ./tcplife -L 80 # only trace local port 80
    ./tcplife -L 80,81 # only trace local ports 80 and 81
    ./tcplife -D 80 # only trace remote port 80
```

Example: start a service on port 8000 with python -m http.server, then request it from the same machine with curl ip:8000:

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcplife -T
TIME PID COMM LADDR LPORT RADDR RPORT TX_KB RX_KB MS
14:33:27 8981 curl 192.168.1.150 8000 192.168.1.150 56164 2 0 0.68
14:33:27 8981 curl 192.168.1.150 56164 192.168.1.150 8000 0 2 0.70
```

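I also tried -L 8000 and -D 8000. Each works on its own, but combined (-L 8000 -D 8000) they act as a logical AND: a session must match both filters.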
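The AND behaviour observed above can be written as a small predicate. This is an illustrative sketch of the filtering logic, not tcplife's actual code (which applies its port filters inside the BPF program), and session_matches is a hypothetical name. The checks below use the two sessions from the output above.

```python
from typing import AbstractSet, Optional

def session_matches(lport: int, rport: int,
                    local_ports: Optional[AbstractSet[int]] = None,
                    remote_ports: Optional[AbstractSet[int]] = None) -> bool:
    """Return True if a session passes both optional port filters.

    An unset filter accepts everything; when both are set, both must match.
    """
    if local_ports is not None and lport not in local_ports:
        return False
    if remote_ports is not None and rport not in remote_ports:
        return False
    return True
```

With -L 8000 only the server-side line (LPORT 8000) passes, and with -D 8000 only the client-side line passes. With both filters set, neither line has 8000 on both ends, so nothing is printed.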

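3.4. tcptracer: trace established TCP connections

Traces TCP sockets with established connections and prints one line for every connect/accept/close event. Only sockets whose connections were actually established are traced.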

```
NAME
    tcptracer - Trace TCP established connections. Uses Linux eBPF/bcc.

DESCRIPTION
    This tool traces established TCP connections that open and close while tracing, and prints a line of
    output per connect, accept and close events. This includes the type of event, PID, IP addresses and
    ports.

    This tool works by using kernel dynamic tracing, and will need to be updated if the kernel
    implementation changes. Only established TCP connections are traced, so it is expected that the overhead of this
    tool is rather low.
```

```
usage: tcptracer [-h] [-t] [-p PID] [-N NETNS] [--cgroupmap CGROUPMAP]
                 [--mntnsmap MNTNSMAP] [-v]

examples: (collected from the man page)
    ./tcptracer # Trace all TCP established connections
    ./tcptracer -v # Trace all TCP established connections with verbose lines
    ./tcptracer -p 181 # Trace PID 181 only
    ./tcptracer -N 4026531969 # Trace connections in network namespace 4026531969 only
    ./tcptracer --cgroupmap /sys/fs/bpf/test01 # Trace a set of cgroups only
```

Example: start a service on port 8000 with python -m http.server, then request it from the same machine with curl ip:8000:

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcptracer
Tracing TCP established connections. Ctrl-C to end.
T PID COMM IP SADDR DADDR SPORT DPORT
C 9102 curl 4 192.168.1.150 192.168.1.150 56260 8000
A 5110 python 4 192.168.1.150 192.168.1.150 8000 56260
X 5110 python 4 192.168.1.150 192.168.1.150 8000 56260
X 9102 curl 4 192.168.1.150 192.168.1.150 56260 8000

# 5110 is the PID of the port-8000 service
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcptracer -t -p 5110
Tracing TCP established connections. Ctrl-C to end.
TIME(s) T PID COMM IP SADDR DADDR SPORT DPORT
0.000 A 5110 python 4 192.168.1.150 192.168.1.150 8000 56270
0.001 X 5110 python 4 192.168.1.150 192.168.1.150 8000 56270
```

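Explanation of the event types:

- C (connect): an actively opened connection (via connect()) has been established.
- A (accept): the server accepted a connection request from a client, creating a new connection.
- X (close): the TCP connection was closed, either normally (a FIN/ACK exchange) or abnormally because of an error.

Use -v to print full event names (-v Print full lines, with long event type names and network namespace numbers.)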

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcptracer -v
Tracing TCP established connections. Ctrl-C to end.
TYPE PID COMM IP SADDR DADDR SPORT DPORT NETNS
connect 9418 curl 4 192.168.1.150 192.168.1.150 56426 8000 4026531992
accept 5110 python 4 192.168.1.150 192.168.1.150 8000 56426 4026531992
close 5110 python 4 192.168.1.150 192.168.1.150 8000 56426 4026531992
close 9418 curl 4 192.168.1.150 192.168.1.150 56426 8000 4026531992
```
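The connect/accept/close sequence that tcptracer reports can be reproduced from user space with a loopback TCP session. The sketch below needs Python 3.8+ for socket.create_server, and loopback_session is a hypothetical name. It shows that the client's local address equals the peer address returned by accept(), which is why the connect and accept rows above show the same port pair, mirrored.

```python
import socket
from typing import Tuple

Addr = Tuple[str, int]

def loopback_session() -> Tuple[Addr, Addr]:
    """Open and close one TCP connection on 127.0.0.1.

    Returns (client's local address, peer address returned by accept()).
    """
    with socket.create_server(("127.0.0.1", 0)) as server:  # listen() on an ephemeral port
        port = server.getsockname()[1]
        with socket.create_connection(("127.0.0.1", port)) as client:  # active open: "C"
            conn, peer = server.accept()  # passive open: "A"
            with conn:  # leaving the with-blocks closes both ends: the two "X" events
                return client.getsockname(), peer
```

Running this while ./tcptracer -v is active should show one connect, one accept and two close lines on 127.0.0.1, though I have not captured that output here.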

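3.5. tcpconnect: trace active TCP connections

Traces TCP connections initiated actively (via connect()). Every connection attempt is traced, even if it ultimately fails.

Note: accept() is a passive connection and is outside its scope (use tcpaccept for that).

The traced functions are tcp_v4_connect() and tcp_v6_connect().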

```
NAME
    tcpconnect - Trace TCP active connections (connect()). Uses Linux eBPF/bcc.

SYNOPSIS
    tcpconnect [-h] [-c] [-t] [-p PID] [-P PORT] [-L] [-u UID] [-U] [--cgroupmap MAPPATH] [--mntnsmap MAPPATH] [-d]

DESCRIPTION
    This tool traces active TCP connections (eg, via a connect() syscall; accept() are passive connections). This can be useful for general
    troubleshooting to see what connections are initiated by the local server.

    All connection attempts are traced, even if they ultimately fail.

    This works by tracing the kernel tcp_v4_connect() and tcp_v6_connect() functions using dynamic tracing, and will need updating to match any changes
    to these functions.
```

Some usage examples (-h):

```
examples:
    ./tcpconnect # trace all TCP connect()s
    ./tcpconnect -t # include timestamps
    ./tcpconnect -d # include DNS queries associated with connects
    ./tcpconnect -p 181 # only trace PID 181
    ./tcpconnect -P 80 # only trace port 80
    # several ports can be traced at once
    ./tcpconnect -P 80,81 # only trace port 80 and 81
    ./tcpconnect -U # include UID
    ./tcpconnect -u 1000 # only trace UID 1000
    ./tcpconnect -c # count connects per src ip and dest ip/port
    ./tcpconnect -L # include LPORT while printing outputs
    ./tcpconnect --cgroupmap mappath # only trace cgroups in this BPF map
    ./tcpconnect --mntnsmap mappath # only trace mount namespaces in the map
```

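Example: start a service on port 8000 with python -m http.server and request it from the same machine with curl ip:8000.

Then also request a nonexistent port with curl ip:12345. Both attempts are traced: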

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcpconnect
Tracing connect ... Hit Ctrl-C to end
PID COMM IP SADDR DADDR DPORT
10189 curl 4 192.168.1.150 192.168.1.150 12345
10194 curl 4 192.168.1.150 192.168.1.150 8000
```
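The curl ip:12345 line above is a failed attempt that tcpconnect still logs. As I understand it, this is because it records the call to tcp_v4_connect(), which returns once the SYN has been sent, before the RST comes back. The sketch below reproduces both outcomes from Python. find_closed_port and try_connect are hypothetical helpers. Grabbing a free port by binding and releasing it is racy in principle, but fine for a quick local test.

```python
import socket

def find_closed_port() -> int:
    """Bind an ephemeral port and release it; nothing listens there afterwards."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.bind(("127.0.0.1", 0))
        return s.getsockname()[1]

def try_connect(port: int, host: str = "127.0.0.1") -> str:
    """Make one active TCP connection attempt and report how it ended."""
    try:
        with socket.create_connection((host, port), timeout=2):
            return "connected"
    except ConnectionRefusedError:
        return "refused"  # the SYN was answered with an RST
```

Both calls correspond to a line in tcpconnect's output; only the second would also show up in tcpaccept on the server side.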

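Another case still to be investigated (TODO, resolved in the section below): host 1 (a Mac, 192.168.1.2) sshes into host 2 (a Linux PC, the 192.168.1.150 above). python -m http.server was started on the Linux machine, but curl 192.168.1.150:8000 from the Mac did not get through.

- Pinging 192.168.1.150 from the Mac works.
- During curl, packet captures show that the client sent a SYN and the server received it, but there was no further reply. I got confused here on the Linux side: tcpconnect on the server did not capture a connect, and I wondered why a received SYN doesn't count as one. The answer is that tcpconnect only captures connect() calls made actively on the local machine. The connects captured above showed up only because the client ran on the same machine as the server.
- On the Linux machine itself, curl 192.168.1.150:8000 gets normal HTTP responses.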

3.6. tcpaccept: trace passive TCP connections

Traces passive connections, and only successful TCP accepts.

The traced function is inet_csk_accept() (registered as tcp_prot.accept).

```
NAME
    tcpaccept - Trace TCP passive connections (accept()). Uses Linux eBPF/bcc.

DESCRIPTION
    This tool traces passive TCP connections (eg, via an accept() syscall; connect() are active connections). This can be useful for general
    troubleshooting to see what new connections the local server is accepting.

    This uses dynamic tracing of the kernel inet_csk_accept() socket function (from tcp_prot.accept), and will need to be modified to match kernel
    changes.

    This tool only traces successful TCP accept()s. Connection attempts to closed ports will not be shown (those can be traced via other functions).
```

```
examples:
    ./tcpaccept # trace all TCP accept()s
    ./tcpaccept -t # include timestamps
    ./tcpaccept -P 80,81 # only trace port 80 and 81
    ./tcpaccept -p 181 # only trace PID 181
    ./tcpaccept --cgroupmap mappath # only trace cgroups in this BPF map
    ./tcpaccept --mntnsmap mappath # only trace mount namespaces in the map
```

Example: on the Linux machine (192.168.1.150), start a service on port 8000 with python -m http.server. Then ssh into the Linux machine and run curl 192.168.1.150:8000 there:

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcpaccept
PID COMM IP RADDR RPORT LADDR LPORT
1227 sshd 4 192.168.1.2 63276 192.168.1.150 22
5110 python 4 192.168.1.150 57004 192.168.1.150 8000
```

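3.7. tcpconnlat: trace active TCP connection latency

TCP connection latency is the time it takes to establish a connection. It is measured locally, from sending the SYN to receiving the peer's response packet (normally the SYN+ACK of the three-way handshake, to which the local side then replies with the final ACK).

It covers kernel TCP/IP processing and the network round trip, not application runtime.

All connection attempts are traced, even those that ultimately fail (answered with an RST).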

```
# man bcc-tcpconnlat
NAME
    tcpconnlat - Trace TCP active connection latency. Uses Linux eBPF/bcc.

SYNOPSIS
    tcpconnlat [-h] [-t] [-p PID] [-L] [-v] [min_ms]

DESCRIPTION
    This tool traces active TCP connections (eg, via a connect() syscall), and shows the latency (time) for the connection as measured locally: the
    time from SYN sent to the response packet. This is a useful performance metric that typically spans kernel TCP/IP processing and the network round
    trip time (not application runtime).

    All connection attempts are traced, even if they ultimately fail (RST packet in response).

    This tool works by use of kernel dynamic tracing of TCP/IP functions, and will need updating to match any changes to these functions. This tool
    should be updated in the future to use static tracepoints, once they are available.
```

```
# -h
examples:
    ./tcpconnlat # trace all TCP connect()s
    ./tcpconnlat 1 # trace connection latency slower than 1 ms
    ./tcpconnlat 0.1 # trace connection latency slower than 100 us
    ./tcpconnlat -t # include timestamps
    ./tcpconnlat -p 181 # only trace PID 181
    ./tcpconnlat -L # include LPORT while printing outputs
```

Example: start a service on port 8000 with python -m http.server and request it from the same machine with curl ip:8000.

Then also request a nonexistent port with curl ip:12345:

```
# -L also shows the local port, which is hidden by default
# only actively initiated connections are traced
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcpconnlat -L
PID COMM IP SADDR LPORT DADDR DPORT LAT(ms)
12326 curl 4 192.168.1.150 57406 192.168.1.150 8000 0.09
12331 curl 4 192.168.1.150 51656 192.168.1.150 12345 0.08
```
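For comparison, a user-space program can only time its own connect() call. This sketch is a rough analogue, not how tcpconnlat works: tcpconnlat timestamps inside the kernel, from the SYN being sent to the response arriving, while this version also includes syscall overhead. The function name connect_latency_ms is hypothetical.

```python
import socket
import time

def connect_latency_ms(host: str, port: int) -> float:
    """Time one blocking connect() from user space, in milliseconds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        start = time.perf_counter_ns()
        try:
            s.connect((host, port))
        except ConnectionRefusedError:
            pass  # an RST response still ends the wait, like the port 12345 case
        return (time.perf_counter_ns() - start) / 1e6
```

On loopback both cases should come out well under a millisecond, in line with the 0.08 to 0.09 ms that tcpconnlat reports above.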

3.8. tcpretrans: trace TCP retransmissions

Dynamically traces the kernel functions tcp_retransmit_skb() and tcp_send_loss_probe(). It may need updating to match different kernel versions.

```
# man bcc-tcpretrans
DESCRIPTION
    This traces TCP retransmits, showing address, port, and TCP state information, and sometimes the PID (although usually not, since retransmits are
    usually sent by the kernel on timeouts). To keep overhead very low, only the TCP retransmit functions are traced. This does not trace every packet
    (like tcpdump(8) or a packet sniffer). Optionally, it can count retransmits over a user signalled interval to spot potentially dropping network
    paths the flows are traversing.

# check whether these kernel symbols exist:
# grep -wE "tcp_retransmit_skb|tcp_send_loss_probe" /proc/kallsyms

    This uses dynamic tracing of the kernel tcp_retransmit_skb() and tcp_send_loss_probe() functions, and will need to be updated to match kernel
    changes to these functions.
```
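The grep check above can also be done in Python when scripting a pre-flight test before attaching probes. In this sketch, find_symbols is a hypothetical helper. It relies on the /proc/kallsyms line layout "<address> <type> <name> [module]". Note that without root the addresses usually read as zeros, but the names are still listed.

```python
from typing import Iterable, Set

def find_symbols(kallsyms_text: str, wanted: Iterable[str]) -> Set[str]:
    """Return which of the wanted kernel symbols appear in kallsyms-formatted text.

    Like grep -w, only whole names match: tcp_drop does not match tcp_drop_reason.
    """
    wanted_set = set(wanted)
    found: Set[str] = set()
    for line in kallsyms_text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[2] in wanted_set:  # third field is the name
            found.add(fields[2])
    return found
```

On a live system: find_symbols(open("/proc/kallsyms").read(), ["tcp_retransmit_skb", "tcp_send_loss_probe"]).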

```
usage: tcpretrans [-h] [-l] [-c]

optional arguments:
  -h, --help show this help message and exit
  -l, --lossprobe include tail loss probe attempts
  -c, --count count occurred retransmits per flow

examples:
    ./tcpretrans # trace TCP retransmits
# TLP: tail loss probe, an algorithm the TCP sender uses to handle loss of the last segments (the tail) of a transmission.
# Its goal is to recover tail losses with fast retransmit instead of waiting for an RTO (retransmission timeout), reducing the latency caused by tail loss and improving TCP performance.
    ./tcpretrans -l # include TLP attempts
```

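Example: below, packet loss is injected to set up the experiment.

3.8.1. Simulating with tc

1. First install tc (yum install iproute-tc) so that packet loss can be injected. The NIC in this lab is enp4s0.

Check the qdisc settings: tc qdisc show dev enp4s0

2. Add a queueing discipline: tc qdisc add dev enp4s0 root netem loss 10%

This failed with "Error: Specified qdisc not found."

The sch_netem module was not loaded, and loading it with modprobe failed: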

```
[root@anonymous ➜ /root ]$ lsmod | grep netem
[root@anonymous ➜ /root ]$ modprobe sch_netem
modprobe: FATAL: Module sch_netem not found in directory /lib/modules/4.18.0-348.el8.x86_64
[root@anonymous ➜ /root ]$
```

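kernel-modules-extra has to be installed first; then run modprobe sch_netem again.

Reference for the fix: RTNETLINK answers: No such file or directory

However, installing that kernel package failed because /boot was out of space. My attempt to enlarge /boot also failed, so I ended up reinstalling the system, after which tc worked normally. (The pitfalls are written up in: A failed attempt at enlarging the /boot partition)

I then generated a lot of local requests with ab -n 10000 -c 2 http://192.168.1.150:8000/ (after starting the service with python -m http.server), but no retransmissions were captured.

Only ssh retransmissions showed up. Requests to the machine's own IP go through the lo loopback interface rather than enp4s0, so the loss rule on enp4s0 never applied to them.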

```
[root@desktop-mme7h3a ➜ /root ]$ tc qdisc add dev enp4s0 root netem loss 30%
[root@desktop-mme7h3a ➜ /root ]$ tc qdisc show
qdisc noqueue 0: dev lo root refcnt 2
qdisc netem 8001: dev enp4s0 root refcnt 2 limit 1000 loss 30%
```

Remove the enp4s0 loss rule above and set up loss on lo instead:

```
[root@desktop-mme7h3a ➜ /root ]$ tc qdisc del dev enp4s0 root netem loss 30%
[root@desktop-mme7h3a ➜ /root ]$ tc qdisc add dev lo root netem loss 20%
[root@desktop-mme7h3a ➜ /root ]$ tc qdisc show
qdisc netem 8003: dev lo root refcnt 2 limit 1000 loss 20%
```

Send requests locally: ab -n 5 -c 1 http://192.168.1.150:8000/

Sure enough, retransmissions are now captured:

```
[root@localhost.localdomain ➜ /usr/share/bcc/tools ]$ ./tcpretrans
Tracing retransmits ... Hit Ctrl-C to end
TIME PID IP LADDR:LPORT T> RADDR:RPORT STATE
15:41:10 13096 4 192.168.1.150:8000 R> 192.168.1.150:50390 FIN_WAIT1
15:41:10 0 4 192.168.1.150:8000 R> 192.168.1.150:50390 FIN_WAIT1
15:41:10 0 4 192.168.1.150:8000 R> 192.168.1.150:50392 FIN_WAIT1
15:41:11 0 4 192.168.1.150:8000 R> 192.168.1.150:50392 FIN_WAIT1
15:41:11 13096 4 192.168.1.150:8000 R> 192.168.1.150:50394 FIN_WAIT1
15:41:11 0 4 192.168.1.150:22 R> 192.168.1.3:28309 ESTABLISHED
15:41:12 0 4 192.168.1.150:50396 R> 192.168.1.150:8000 SYN_SENT
15:41:12 0 4 192.168.1.150:8000 R> 192.168.1.150:50396 FIN_WAIT1
15:41:13 0 4 192.168.1.150:50400 R> 192.168.1.150:8000 SYN_SENT
15:41:13 0 4 192.168.1.150:22 R> 192.168.1.3:28386 ESTABLISHED
15:41:13 0 4 192.168.1.150:22 R> 192.168.1.3:28309 ESTABLISHED
15:41:13 0 4 192.168.1.150:50400 R> 192.168.1.150:8000 LAST_ACK
15:41:13 0 4 192.168.1.150:8000 R> 192.168.1.150:50400 FIN_WAIT1
15:41:13 0 4 192.168.1.150:50400 R> 192.168.1.150:8000 LAST_ACK
15:41:13 0 4 192.168.1.150:50400 R> 192.168.1.150:8000 LAST_ACK
15:41:14 0 4 192.168.1.150:50396 R> 192.168.1.150:8000 LAST_ACK
```

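Packet loss can also be simulated with iptables, as in the next section.

3.8.2. Simulating with iptables

- On the Linux server: iptables -A INPUT -m statistic --mode random --probability 0.2 -j DROP
- On the Linux server: start a service on port 8000 with python -m http.server, and run ./tcpretrans
- On the same machine: request wget 192.168.1.150:8000/tmpstrace (tmpstrace is a file of about 2 MB)
- Restore the environment with iptables -F. This is fine here only because there were no firewall rules originally; otherwise it is better to delete just this one rule, e.g. iptables -D INPUT -m statistic --mode random --probability 0.2 -j DROP

Retransmissions occurred; the results are: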

```
[root@anonymous ➜ /usr/share/bcc/tools ]$ ./tcpretrans
Tracing retransmits ... Hit Ctrl-C to end
TIME PID IP LADDR:LPORT T> RADDR:RPORT STATE
16:48:23 0 4 192.168.1.150:57870 R> 192.168.1.150:8000 ESTABLISHED
16:48:23 5110 4 192.168.1.150:8000 R> 192.168.1.150:57870 ESTABLISHED
16:48:23 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:23 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:23 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:23 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:23 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:23 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:23 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:24 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:24 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:24 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:24 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:24 0 4 192.168.1.150:8000 R> 192.168.1.150:57870 FIN_WAIT1
16:48:24 0 4 192.168.1.150:57870 R> 192.168.1.150:8000 LAST_ACK
```

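Each time TCP retransmits a packet, tcpretrans prints one line with the source and destination addresses and the kernel state of the TCP connection at that moment. TCP retransmissions cause latency and throughput problems.

Retransmissions are usually a sign of poor network health, and this tool is useful for investigating them. Unlike tcpdump, its overhead is very low because it only traces the retransmit functions.

T column: the event type. R> means a retransmission. L> means a tail loss probe (TLP) attempt and is shown only with -l; in some cases the kernel attempts a TLP that ends up not being sent. In both cases the packet goes from the local address (LADDR) to the remote address (RADDR).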

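3.9. tcpsubnet: summarize TCP traffic sent to specific subnets

tcpsubnet sums up the IPv4 TCP traffic sent from the local host to each subnet and prints the totals at a fixed interval.

It uses eBPF to collect and aggregate the data in the kernel, which keeps overhead low.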

```
examples:
    ./tcpsubnet # Trace TCP sent to the default subnets:
                # 127.0.0.1/32,10.0.0.0/8,172.16.0.0/12,
                # 192.168.0.0/16,0.0.0.0/0
    ./tcpsubnet -f K # Trace TCP sent to the default subnets
                     # aggregated in KBytes.
    ./tcpsubnet 10.80.0.0/24 # Trace TCP sent to 10.80.0.0/24 only
    ./tcpsubnet -J # Format the output in JSON.
```
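To see which default subnet a destination is counted under, the classification can be sketched with Python's ipaddress module. I am assuming that subnets are checked in the order given and the first match wins, which would explain why 0.0.0.0/0 is last as a catch-all. classify is a hypothetical name, not part of tcpsubnet.

```python
import ipaddress
from typing import Optional, Sequence

DEFAULT_SUBNETS = ["127.0.0.1/32", "10.0.0.0/8", "172.16.0.0/12",
                   "192.168.0.0/16", "0.0.0.0/0"]

def classify(dst_ip: str, subnets: Sequence[str] = DEFAULT_SUBNETS) -> Optional[str]:
    """Return the first subnet (in the given order) containing dst_ip, else None."""
    addr = ipaddress.IPv4Address(dst_ip)
    for net in subnets:
        if addr in ipaddress.IPv4Network(net):
            return net
    return None
```

With the default list, traffic to 192.168.1.2 (the ssh client in this lab) lands in 192.168.0.0/16, which matches the output below.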

Example:

```
[root@desktop-mme7h3a ➜ /usr/share/bcc/tools ]$ ./tcpsubnet
Tracing... Output every 1 secs. Hit Ctrl-C to end
[06/13/24 14:33:36]
192.168.0.0/16 224
[06/13/24 14:33:37]
192.168.0.0/16 200
[06/13/24 14:33:38]
192.168.0.0/16 200
[06/13/24 14:33:39]
192.168.0.0/16 224
```

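3.10. tcpdrop: trace TCP packets dropped by the kernel

Each time the kernel drops a TCP packet or segment, tcpdrop shows the connection details, including the kernel stack trace that led to the drop.

It traces the tcp_drop() kernel function.

Example: start a service on port 8000 with python -m http.server and load-test it with ab (yum install httpd-tools):

ab -n 100 -c 6 http://192.168.1.150:8000/ sends 100 requests at a concurrency of 6. Note that the trailing / in the URL is required.

Trace output: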

```
[root@desktop-mme7h3a ➜ /usr/share/bcc/tools ]$ ./tcpdrop
TIME PID IP SADDR:SPORT > DADDR:DPORT STATE (FLAGS)
15:11:36 9433 4 192.168.1.150:8000 > 192.168.1.150:33166 CLOSE (ACK)
    b'tcp_drop+0x1'
    b'tcp_rcv_state_process+0xb2'
    b'tcp_v4_do_rcv+0xb4'
    b'__release_sock+0x7c'
    b'__tcp_close+0x180'
    b'tcp_close+0x1f'
    b'inet_release+0x42'
    b'__sock_release+0x3d'
    b'sock_close+0x11'
    b'__fput+0xbe'
    b'task_work_run+0x8a'
    b'exit_to_usermode_loop+0xeb'
    b'do_syscall_64+0x198'
    b'entry_SYSCALL_64_after_hwframe+0x65'

15:11:36 9433 4 192.168.1.150:8000 > 192.168.1.150:33168 CLOSE (ACK)
    b'tcp_drop+0x1'
    b'tcp_rcv_state_process+0xb2'
    b'tcp_v4_do_rcv+0xb4'
    b'__release_sock+0x7c'
    b'__tcp_close+0x180'
    b'tcp_close+0x1f'
    b'inet_release+0x42'
    b'__sock_release+0x3d'
    b'sock_close+0x11'
    b'__fput+0xbe'
    b'task_work_run+0x8a'
    b'exit_to_usermode_loop+0xeb'
    b'do_syscall_64+0x198'
    b'entry_SYSCALL_64_after_hwframe+0x65'
```

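STATE (FLAGS): the TCP connection state and the TCP flags of the dropped segment:

- CLOSE_WAIT (FIN|ACK): CLOSE_WAIT means the local side has received the peer's FIN, but the local application has not yet closed its end (sent its own FIN). FIN|ACK means the segment carries both the FIN and ACK flags, which typically happens while a connection is being closed.
- CLOSE (ACK): CLOSE means the socket is already fully closed, so a segment that still arrives for it (here a pure ACK) is dropped. In the stack above, this happens inside close(): __tcp_close calls __release_sock to process packets queued on the socket backlog, and by then the state is already CLOSE.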
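The FLAGS text is just the set bits of the TCP header's flags byte. The sketch below decodes it using the standard bit values from the TCP specifications (FIN=0x01 through CWR=0x80). The "|" joining and the lowest-bit-first order are my choice, made to match strings like FIN|ACK above; decode_flags is a hypothetical name.

```python
TCP_FLAGS = [  # (bit, name), lowest bit first
    (0x01, "FIN"), (0x02, "SYN"), (0x04, "RST"), (0x08, "PSH"),
    (0x10, "ACK"), (0x20, "URG"), (0x40, "ECE"), (0x80, "CWR"),
]

def decode_flags(flags: int) -> str:
    """Render a TCP header flags byte as text such as 'FIN|ACK'."""
    return "|".join(name for bit, name in TCP_FLAGS if flags & bit)
```

For example, 0x11 is FIN|ACK and 0x10 is a pure ACK, the two cases discussed above.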

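3.11. tcpstates: show TCP state changes

Traces TCP state changes and prints how long the connection spent in each state.

Each time a connection changes state, tcpstates prints a new line with the updated connection details.

It traces tracepoint:sock:inet_sock_set_state.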

```
DESCRIPTION
    This tool traces TCP session state changes while tracing, and prints details including the duration in each state. This can help explain the
    latency of TCP connections: whether the time is spent in the ESTABLISHED state (data transfer), or initialization state (SYN_SENT), etc.

    This tool works using the sock:inet_sock_set_state tracepoint, which was added to Linux 4.16. Linux 4.16 also included extra state transitions so
    that all TCP transitions could be observed by this tracepoint.

    Only TCP state changes are traced, so it is expected that the overhead of this tool is much lower than typical send/receive tracing.
```

```
usage: tcpstates [-h] [-T] [-t] [-w] [-s] [-L LOCALPORT] [-D REMOTEPORT] [-Y]

Trace TCP session state changes and durations

examples:
    ./tcpstates # trace all TCP state changes
    ./tcpstates -t # include timestamp column
    ./tcpstates -T # include time column (HH:MM:SS)
    ./tcpstates -w # wider columns (fit IPv6)
    ./tcpstates -stT # csv output, with times & timestamps
    ./tcpstates -Y # log events to the systemd journal
    ./tcpstates -L 80 # only trace local port 80
    ./tcpstates -L 80,81 # only trace local ports 80 and 81
    ./tcpstates -D 80 # only trace remote port 80
```

Example: start a service on port 8000 with python -m http.server, request it from the same machine with curl ip:8000, and trace port 8000:

```
[root@desktop-mme7h3a ➜ /usr/share/bcc/tools ]$ ./tcpstates -L 8000
SKADDR C-PID C-COMM LADDR LPORT RADDR RPORT OLDSTATE -> NEWSTATE MS
ffff9e64e0769380 8531 curl 0.0.0.0 8000 0.0.0.0 0 LISTEN -> SYN_RECV 0.000
ffff9e64e0769380 8531 curl 192.168.1.150 8000 192.168.1.150 32894 SYN_RECV -> ESTABLISHED 0.004
ffff9e64e0769380 8463 python3 192.168.1.150 8000 192.168.1.150 32894 ESTABLISHED -> FIN_WAIT1 1.303
ffff9e64e0769380 8463 python3 192.168.1.150 8000 192.168.1.150 32894 FIN_WAIT1 -> FIN_WAIT1 0.008
ffff9e64e0769380 8531 curl 192.168.1.150 8000 192.168.1.150 32894 FIN_WAIT1 -> CLOSING 0.010
ffff9e64e0769380 8531 curl 192.168.1.150 8000 192.168.1.150 32894 CLOSING -> CLOSE 0.012
```
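The MS column is the time spent in OLDSTATE, i.e. the gap since the same socket's previous transition; the first transition seen for a socket shows 0.000. The sketch below shows that bookkeeping, keyed by socket address like the SKADDR column. state_durations is a hypothetical name, and the timestamps in the check are made up to reproduce the first three MS values above.

```python
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, float, str, str]  # (skaddr, timestamp_ms, oldstate, newstate)
Row = Tuple[str, str, str, float]    # (skaddr, oldstate, newstate, ms_in_oldstate)

def state_durations(events: Iterable[Event]) -> List[Row]:
    """Attach to each transition the time spent in its old state."""
    last_ts: Dict[str, float] = {}
    rows: List[Row] = []
    for skaddr, ts, old, new in events:
        # Time since this socket's previous transition; 0.0 the first time we see it.
        ms = ts - last_ts[skaddr] if skaddr in last_ts else 0.0
        last_ts[skaddr] = ts
        rows.append((skaddr, old, new, round(ms, 3)))
    return rows
```

Keying by socket address rather than by PID matters here: as the C-PID column shows, successive transitions of one socket can be attributed to different processes (curl and python3).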

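3.12. tcprtt: trace TCP round-trip time

tcprtt monitors the round-trip time (RTT) of TCP connections (sockets with established connections) to assess network quality and help pinpoint problems. It can print the distribution as a histogram.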

```
DESCRIPTION
    This tool traces established connections RTT(round-trip time) to analyze the quality of network. This can be useful for general troubleshooting to
    distinguish the network latency is from user process or physical network.
```

```
usage: tcprtt [-h] [-i INTERVAL] [-d DURATION] [-T] [-m] [-p LPORT] [-P RPORT]
              [-a LADDR] [-A RADDR] [-b] [-B] [-D]

Summarize TCP RTT as a histogram

examples:
    ./tcprtt # summarize TCP RTT
    ./tcprtt -i 1 -d 10 # print 1 second summaries, 10 times
    ./tcprtt -m -T # summarize in millisecond, and timestamps
    ./tcprtt -p # filter for local port
        -p LPORT, --lport LPORT
    ./tcprtt -P # filter for remote port
        -P RPORT, --rport RPORT
    ./tcprtt -a # filter for local address
        -a LADDR, --laddr LADDR
    ./tcprtt -A # filter for remote address
        -A RADDR, --raddr RADDR
    ./tcprtt -b # show sockets histogram by local address
        -b, --byladdr
    ./tcprtt -B # show sockets histogram by remote address
        -B, --byraddr
    ./tcprtt -D # show debug bpf text
        -D, --debug
```

Example 1: overall RTT distribution

```
[root@desktop-mme7h3a ➜ /usr/share/bcc/tools ]$ ./tcprtt -i 1 -d 10 -m
Tracing TCP RTT... Hit Ctrl-C to end.
     msecs : count distribution
    0 -> 1 : 1 |****************************************|

     msecs : count distribution
    0 -> 1 : 0 | |
    2 -> 3 : 0 | |
    4 -> 7 : 1 |****************************************|
   8 -> 15 : 0 | |
  16 -> 31 : 1 |****************************************|
```
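The rows of these histograms are power-of-two ranges (0 -> 1, 2 -> 3, 4 -> 7, ...). The sketch below assigns a value to its row and mimics the displayed layout. bcc's exact slot computation inside the BPF program may differ in details such as how 0 is counted, and log2_bucket/log2_histogram are hypothetical names.

```python
from collections import Counter
from typing import Iterable, Tuple

def log2_bucket(value: int) -> Tuple[int, int]:
    """(low, high) of the power-of-two row that holds value: 0->1, 2->3, 4->7, ..."""
    if value < 0:
        raise ValueError("value must be non-negative")
    if value <= 1:
        return (0, 1)
    k = value.bit_length() - 1  # floor(log2(value))
    return (1 << k, (1 << (k + 1)) - 1)

def log2_histogram(values: Iterable[int]) -> Counter:
    """Count values per row, like the 'count' column of the histograms above."""
    return Counter(log2_bucket(v) for v in values)
```

For instance, RTTs of 5 ms and 20 ms fall into the 4 -> 7 and 16 -> 31 rows, the same shape as the second interval above.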

Example 2: grouped by remote IP

```
[root@desktop-mme7h3a ➜ /usr/share/bcc/tools ]$ ./tcprtt -i 3 -m --lport 8000 --byraddr
Tracing TCP RTT... Hit Ctrl-C to end.
Remote Address: = b'192.168.1.150'
     msecs : count distribution
    0 -> 1 : 3 |****************************************|

Remote Address: = b'192.168.1.150'
     msecs : count distribution
    0 -> 1 : 300 |****************************************|

Remote Address: = b'192.168.1.150'
     msecs : count distribution
    0 -> 1 : 3 |****************************************|

Remote Address: = b'192.168.1.150'
     msecs : count distribution
    0 -> 1 : 3 |****************************************|
```

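3.13. tcpsynbl: trace the SYN backlog length

Measures the backlog length when SYNs arrive and shows one histogram per backlog limit; ports with the same limit are aggregated into one histogram. Judging from the results below, the backlog shown here is actually the accept queue (also called the full-connection queue).

The traced kernel functions are tcp_v4_syn_recv_sock() and tcp_v6_syn_recv_sock().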

```
DESCRIPTION
    This tool shows the TCP SYN backlog size during SYN arrival as a histogram. This lets you see how close your applications are to hitting the
    backlog limit and dropping SYNs (causing performance issues with SYN retransmits), and is a measure of workload saturation. The histogram shown is
    measured at the time of SYN received, and a separate histogram is shown for each backlog limit.

    This works by tracing the tcp_v4_syn_recv_sock() and tcp_v6_syn_recv_sock() kernel functions using dynamic instrumentation. Since these functions
    may change in future kernels, this tool may need maintenance to keep working.
```

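Example:

- Trace for a while, then press Ctrl-C to stop and view the statistics.
- Meanwhile, start a service on port 8000 with python -m http.server and send concurrent requests with ab: ab -n 100 -c 6 http://192.168.1.150:8000/
- Also run this test twice: curl 192.168.1.150:22

Results below: the HTTP service's maximum accept queue length is 5, and all 100 requests were traced (4 + 2 + 94). Port 22's maximum accept queue length is 128, and its 2 requests were traced as well.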

```
[root@localhost.localdomain ➜ /usr/share/bcc/tools ]$ ./tcpsynbl
Tracing SYN backlog size. Ctrl-C to end.
^C

backlog_max = 5
    backlog : count distribution
     0 -> 1 : 4 |* |
     2 -> 3 : 2 | |
     4 -> 7 : 94 |****************************************|

backlog_max = 128
    backlog : count distribution
     0 -> 1 : 2 |****************************************|
```

```
[root@localhost.localdomain ➜ /usr/share/bcc/tools ]$ ss -ltn
State Recv-Q Send-Q Local Address:Port Peer Address:Port Process
LISTEN 0 128 0.0.0.0:22 0.0.0.0:*
LISTEN 0 5 0.0.0.0:8000 0.0.0.0:*
LISTEN 0 5 127.0.0.1:44321 0.0.0.0:*
LISTEN 0 5 127.0.0.1:4330 0.0.0.0:*
LISTEN 0 128 [::]:22 [::]:*
LISTEN 0 5 [::1]:44321 [::]:*
LISTEN 0 5 [::1]:4330 [::]:*
```
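The limit of 5 for port 8000 comes from Python itself. http.server.HTTPServer is a socketserver.TCPServer subclass, and TCPServer.server_activate() calls listen(self.request_queue_size), a class attribute that defaults to 5. The sketch below checks this and raises the limit through a subclass. BiggerBacklogHTTPServer and listen_backlog are hypothetical names, and the kernel still caps the value at net.core.somaxconn.

```python
import http.server
import socketserver

class BiggerBacklogHTTPServer(http.server.HTTPServer):
    """Hypothetical subclass: TCPServer.server_activate() passes
    request_queue_size to listen(), so overriding it raises the backlog."""
    request_queue_size = 128

def listen_backlog(server_cls: type) -> int:
    """Backlog a socketserver-based server class will request from listen()."""
    return server_cls.request_queue_size
```

For example, BiggerBacklogHTTPServer(("0.0.0.0", 8000), http.server.SimpleHTTPRequestHandler).serve_forever() would serve the current directory like python -m http.server. ss -ltn and tcpsynbl should then report 128 instead of 5.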

3.14. solisten: trace listen operations

All listen operations are traced, even if they ultimately fail.

```
NAME
    solisten - Trace listening socket

SYNOPSIS
    usage: solisten [-h] [--show-netns] [-p PID] [-n NETNS]

DESCRIPTION
    All IPv4 and IPv6 listen attempts are traced, even if they ultimately fail or the listening program is
    not willing to accept().
```

```
usage: solisten [-h] [--show-netns] [-p PID] [-n NETNS]

Stream sockets listen

Examples:
    ./solisten # Stream socket listen
    ./solisten -p 1234 # Stream socket listen for specified PID only
    ./solisten --netns 4242 # " for the specified network namespace ID only
    ./solisten --show-netns # Show network ns ID (useful for containers)
```

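Example: start listening services on different ports:

- python -m http.server
- python -m http.server 8001
- nc -l 8080

All of these listen attempts are traced. The output shows IPv4/IPv6 and the corresponding accept queue limit (BACKLOG):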

```
[root@localhost.localdomain ➜ /usr/share/bcc/tools ]$ ./solisten
PID COMM PROTO BACKLOG PORT ADDR
11271 python TCPv4 5 8000 0.0.0.0
11292 python TCPv4 5 8001 0.0.0.0
11316 nc TCPv6 10 8080 ::
11316 nc TCPv4 10 8080 0.0.0.0
```

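3.15. netqtop: trace packets on NIC transmit/receive queues

Traces packets on the transmit and receive queues of a given NIC, classifies them by packet size and counts the packets in each class. This can be used to check whether network load is balanced across queues.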

```
usage: netqtop [-h] [--name NAME] [--interval INTERVAL] [--throughput]

optional arguments:
  -h, --help show this help message and exit
  --name NAME, -n NAME
  --interval INTERVAL, -i INTERVAL
  --throughput, -t
```

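Tracepoints: net:net_dev_start_xmit and net:netif_receive_skb

Example: trace the given NIC, report every 3 s, and also print BPS and PPS (Packets Per Second). Judging from the numbers, BPS here is bytes per second rather than bits per second: in the first TX interval, 3 packets × 90.0 bytes / 3 s = 90.0.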

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

[root@localhost.localdomain ➜ /usr/share/bcc/tools ]$ ./netqtop -n enp4s0 -i 3 -t

Fri Jun 14 14:19:15 2024

TX

QueueID avg_size [0, 64) [64, 512) [512, 2K) [2K, 16K) [16K, 64K) BPS PPS

0 90.0 1 2 0 0 0 90.0 1.0

Total 90.0 1 2 0 0 0 90.0 1.0

RX

QueueID avg_size [0, 64) [64, 512) [512, 2K) [2K, 16K) [16K, 64K) BPS PPS

0 58.8 4 1 0 0 0 98.0 1.67

Total 58.8 4 1 0 0 0 98.0 1.67

-----------------------------------------------------------------------------------------------

Fri Jun 14 14:19:18 2024

TX

QueueID avg_size [0, 64) [64, 512) [512, 2K) [2K, 16K) [16K, 64K) BPS PPS

0 243.33 0 6 0 0 0 486.67 2.0

Total 244.4 0 5 0 0 0 407.33 1.67

RX

QueueID avg_size [0, 64) [64, 512) [512, 2K) [2K, 16K) [16K, 64K) BPS PPS

0 52.0 3 1 0 0 0 69.33 1.33

Total 52.0 3 1 0 0 0 69.33 1.33

-----------------------------------------------------------------------------------------------
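To make the columns concrete, here is a small Python sketch of the per-queue arithmetic. It assumes K = 1024 in the bucket labels and BPS = total bytes / interval, as the numbers above imply. It is not netqtop's source: summarize_queue is a hypothetical helper, and the packet sizes in the example are made up so that their totals match the first TX row.

```python
from typing import List, Sequence, Tuple

# Size buckets as labelled in netqtop's header, assuming K = 1024 bytes
BUCKETS: List[Tuple[int, int]] = [
    (0, 64), (64, 512), (512, 2048), (2048, 16384), (16384, 65536),
]


def summarize_queue(sizes: Sequence[int], interval: float):
    """Return (avg_size, bucket_counts, BPS, PPS) for one queue over one interval.

    BPS is bytes per second (total bytes / interval); PPS is packets per second.
    """
    counts = [0] * len(BUCKETS)
    for size in sizes:
        for i, (lo, hi) in enumerate(BUCKETS):
            if lo <= size < hi:
                counts[i] += 1
                break  # sizes >= 64K fall outside every column in this sketch
    total_bytes = sum(sizes)
    n = len(sizes)
    avg_size = total_bytes / n if n else 0.0
    return (round(avg_size, 2), counts,
            round(total_bytes / interval, 2), round(n / interval, 2))


if __name__ == "__main__":
    # Hypothetical sizes: 3 packets, 270 bytes in total, -i 3
    print(summarize_queue([60, 100, 110], interval=3))
```

With these inputs the result lines up with the first TX row: avg_size 90.0, one packet in [0, 64), two in [64, 512), 90.0 BPS and 1.0 PPS.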

3.16. softirqs: soft IRQ time statistics

Traces soft IRQ events and summarizes the total time spent in each soft IRQ type.

Tracepoints: irq:softirq_enter and irq:softirq_exit
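The enter/exit pairing can be sketched in Python as follows. The sketch replays a list of hypothetical (timestamp_ns, cpu, kind, softirq) events and keys the start timestamp by CPU, on the assumption that softirq handlers do not nest on the same CPU. The event format and total_softirq_time_us are illustrative, not the tool's internals.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical event format: (timestamp_ns, cpu, kind, softirq), kind is "enter" or "exit"
Event = Tuple[int, int, str, str]


def total_softirq_time_us(events: Iterable[Event]) -> Dict[str, float]:
    """Sum (exit - enter) per softirq type, pairing events on the same CPU."""
    start: Dict[int, Tuple[int, str]] = {}  # cpu -> (enter timestamp, softirq)
    totals: Dict[str, float] = defaultdict(float)
    for ts, cpu, kind, vec in events:
        if kind == "enter":
            start[cpu] = (ts, vec)
        elif kind == "exit" and cpu in start:
            t0, vec0 = start.pop(cpu)
            if vec0 == vec:  # ignore mismatched enter/exit pairs
                totals[vec] += (ts - t0) / 1000.0  # ns -> usecs
    return dict(totals)


if __name__ == "__main__":
    demo = [
        (1_000, 0, "enter", "net_rx"), (3_000, 0, "exit", "net_rx"),
        (5_000, 1, "enter", "timer"), (6_500, 1, "exit", "timer"),
    ]
    print(total_softirq_time_us(demo))
```

An exit event without a recorded enter (for example, one that fired before tracing started) is simply skipped.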

usage: softirqs [-h] [-T] [-N] [-d] [interval] [count]

Summarize soft irq event time as histograms.

examples:

./softirqs # sum soft irq event time

./softirqs -d # show soft irq event time as histograms

./softirqs 1 10 # print 1 second summaries, 10 times

./softirqs -NT 1 # 1s summaries, nanoseconds, and timestamps

[root@localhost.localdomain ➜ /usr/share/bcc/tools ]$ ./softirqs 3

Tracing soft irq event time... Hit Ctrl-C to end.

SOFTIRQ TOTAL_usecs

net_tx 1

rcu 25

sched 486

timer 996

net_rx 1058

SOFTIRQ TOTAL_usecs

net_rx 159

rcu 433

sched 798

timer 1255

With -d, the time for each soft IRQ type is shown as a histogram:

[root@localhost.localdomain ➜ /usr/share/bcc/tools ]$ ./softirqs -d 3

Tracing soft irq event time... Hit Ctrl-C to end.

...

softirq = net_rx

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 1 |******************** |

4 -> 7 : 0 | |

8 -> 15 : 1 |******************** |

16 -> 31 : 0 | |

32 -> 63 : 2 |****************************************|

...
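The histogram rows use power-of-two buckets (0 -> 1, 2 -> 3, 4 -> 7, ...). The Python sketch below reproduces that bucket layout and scales the stars to the largest count, which gives the 20-star and 40-star rows seen above. log2_slot, slot_range and render_log2_hist are hypothetical helpers, and the sample durations are made up.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def log2_slot(value: int) -> int:
    """Slot index: 0 and 1 -> 1, 2-3 -> 2, 4-7 -> 3, 8-15 -> 4, ..."""
    return max(value, 1).bit_length()


def slot_range(slot: int) -> Tuple[int, int]:
    low, high = (1 << slot) >> 1, (1 << slot) - 1
    if low == high:
        low -= 1  # the first slot is printed as "0 -> 1"
    return low, high


def render_log2_hist(values: Iterable[int], width: int = 40) -> List[str]:
    counts = Counter(log2_slot(v) for v in values)
    if not counts:
        return []
    max_count = max(counts.values())
    lines = []
    for slot in range(1, max(counts) + 1):  # print empty slots too, as in the output above
        low, high = slot_range(slot)
        n = counts.get(slot, 0)
        stars = "*" * (width * n // max_count)
        lines.append(f"{low:>8} -> {high:<8}: {n:<8}|{stars:<{width}}|")
    return lines


if __name__ == "__main__":
    # Hypothetical net_rx durations in usecs
    print("\n".join(render_log2_hist([3, 10, 40, 50])))
```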

4. Investigating the TODO issue (a false alarm)

This section investigates a question left open during the experiments above.

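The case left as a TODO: host 1 (a Mac, 192.168.1.2) connects via ssh to host 2 (a Linux PC, the 192.168.1.150 used above), and the service is started on the Linux host with python -m http.server. However, curl 192.168.1.150:8000 from the Mac fails, even though the two hosts can ping each other.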

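1. Start the service on the server (192.168.1.150).

Capture port 8000 traffic on both ends, and on the server run tcpdrop (to catch dropped packets) and ./tcpstates -L 8000 (to trace state changes of sockets on local port 8000).

2. Send a curl request from the client (192.168.1.2).

3. The results are shown below: the client and the server each capture a single SYN packet and nothing else, and neither tcpdrop nor ./tcpstates records anything.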

# Client

➜ /Users/xd/Documents/workspace ✗ tcpdump -i en0 port 8000 -nn

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on en0, link-type EN10MB (Ethernet), capture size 262144 bytes

15:49:30.403293 IP 192.168.1.2.60055 > 192.168.1.150.8000: Flags [S], seq 78903990, win 65535, options [mss 1460,nop,wscale 6,nop,nop,TS val 1880147800 ecr 0,sackOK,eol], length 0

# Server

[root@xdlinux ➜ /root ]$ tcpdump -i any port 8000 -nn

dropped privs to tcpdump

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on any, link-type LINUX_SLL (Linux cooked v1), capture size 262144 bytes

07:49:29.461703 IP 192.168.1.2.60055 > 192.168.1.150.8000: Flags [S], seq 78903990, win 65535, options [mss 1460,nop,wscale 6,nop,nop,TS val 1880147800 ecr 0,sackOK,eol], length 0

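4. Analysis: the server receives the SYN but never replies with a SYN+ACK. Because tcpdump captures incoming packets before netfilter processes them, this pattern suggests the SYN was filtered before it reached the TCP stack, which would also explain why tcpdrop and tcpstates recorded nothing.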
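On the client side, one quick way to tell failure modes apart is to look at how the connect attempt fails. The Python sketch below is an illustrative probe (probe_tcp is a hypothetical helper): a timeout means no reply came back at all, a refusal means the host answered with a RST (reachable, but nothing listening), and an unreachable error typically means an ICMP error came back, for example from a firewall reject. Which of these curl runs into depends on whether the firewall silently drops the SYN or rejects it.

```python
import errno
import socket


def probe_tcp(host: str, port: int, timeout: float = 3.0) -> str:
    """Classify a TCP connect attempt by how it succeeds or fails."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"         # handshake completed (SYN+ACK received)
    except socket.timeout:
        return "timeout"          # no reply at all: packets silently dropped somewhere
    except ConnectionRefusedError:
        return "refused"          # RST: host reachable, but no listener on the port
    except OSError as exc:
        if exc.errno in (errno.EHOSTUNREACH, errno.ENETUNREACH):
            return "unreachable"  # usually an ICMP error, e.g. a firewall reject or no route
        return f"error: {exc}"


if __name__ == "__main__":
    # Hypothetical usage from the client in this case: probe_tcp("192.168.1.150", 8000)
    print(probe_tcp("127.0.0.1", 9, timeout=1.0))
```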

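I checked the firewall again: after flushing the rules with iptables -F and retrying, the request succeeded... I was sure I had already tried turning off the firewall earlier, and it still failed at the time. A false alarm of my own making.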

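The first step was to add the client IP to the firewall allowlist. The iptables service was the traditional way to manage the firewall (e.g. on CentOS 6); CentOS 7 and CentOS 8 use the firewalld service by default, managed with the firewall-cmd command.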

The configuration that did not work:

[root@xdlinux ➜ /home/workspace/ ]$ firewall-cmd --permanent --add-source=192.168.1.0/24

[root@xdlinux ➜ /home/workspace/ ]$ firewall-cmd --reload

[root@xdlinux ➜ /home/workspace/ ]$ iptables -nL|grep 192

IN_public all -- 192.168.1.0/24 0.0.0.0/0 [goto]

FWDI_public all -- 192.168.1.0/24 0.0.0.0/0 [goto]

FWDO_public all -- 0.0.0.0/0 192.168.1.0/24 [goto]

[root@xdlinux ➜ /home/workspace/ ]$ firewall-cmd --list-all

public (active)

target: default

icmp-block-inversion: no

interfaces: enp4s0

sources: 192.168.1.0/24

services: cockpit dhcpv6-client ssh

ports:

protocols:

forward: no

masquerade: no

forward-ports:

source-ports:

icmp-blocks:

rich rules:

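Even with the whole 192.168.1.0/24 subnet added to the allowlist, curl from the client still failed. In hindsight this makes sense: --add-source only binds those source addresses to a zone (here public, which already applies to enp4s0), so the packets are still handled by the public zone's rules, and those only allow the cockpit, dhcpv6-client and ssh services.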

The configuration that worked:

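I asked GPT: the traffic has to be allowed in the public zone, either by opening the port or by adding a rich rule. After adding the rich rule below, the client connected normally, and tcpstates traced the connection as well.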

[root@xdlinux ➜ /home/workspace/ ]$ firewall-cmd --permanent --zone=public --add-rich-rule='rule family="ipv4" source address="192.168.1.0/24" accept'

[root@xdlinux ➜ /home/workspace/ ]$ firewall-cmd --reload

[root@xdlinux ➜ /home/workspace/ ]$ firewall-cmd --list-all

public (active)

target: default

icmp-block-inversion: no

interfaces: enp4s0

sources: 192.168.1.0/24 192.168.1.2

services: cockpit dhcpv6-client ssh

ports:

protocols:

forward: no

masquerade: no

forward-ports:

source-ports:

icmp-blocks:

rich rules:

rule family="ipv4" source address="192.168.1.0/24" accept
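For reference, the two approaches mentioned above can be written out as firewall-cmd invocations. The Python sketch below only builds the argument lists (firewalld_allow_cmds is a hypothetical helper), so they can be reviewed before being run by hand or passed to subprocess.run. Unlike the rule above, the rich rule here is narrowed to TCP port 8000 instead of accepting all traffic from the subnet. Either option should be enough on its own.

```python
import shlex
from typing import List


def firewalld_allow_cmds(subnet: str, port: int, zone: str = "public") -> List[List[str]]:
    """Build (but do not run) firewall-cmd argv lists that let `subnet` reach TCP `port`."""
    rich_rule = (
        f'rule family="ipv4" source address="{subnet}" '
        f'port port="{port}" protocol="tcp" accept'
    )
    return [
        # Option A: open the port in the zone for every source
        ["firewall-cmd", "--permanent", f"--zone={zone}", f"--add-port={port}/tcp"],
        # Option B: a rich rule scoped to one subnet and one port
        ["firewall-cmd", "--permanent", f"--zone={zone}", f"--add-rich-rule={rich_rule}"],
        # --permanent changes only take effect after a reload
        ["firewall-cmd", "--reload"],
    ]


if __name__ == "__main__":
    for argv in firewalld_allow_cmds("192.168.1.0/24", 8000):
        print(shlex.join(argv))  # shell-quoted, ready to paste into a terminal
```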

[root@xdlinux ➜ /usr/share/bcc/tools ]$ ./tcpstates -L 8000

SKADDR C-PID C-COMM LADDR LPORT RADDR RPORT OLDSTATE -> NEWSTATE MS

ffff9f20324930c0 0 swapper/9 0.0.0.0 8000 0.0.0.0 0 LISTEN -> SYN_RECV 0.000

ffff9f20324930c0 0 swapper/9 192.168.1.150 8000 192.168.1.2 61537 SYN_RECV -> ESTABLISHED 0.016

ffff9f20324930c0 8111 python 192.168.1.150 8000 192.168.1.2 61537 ESTABLISHED -> FIN_WAIT1 2.280

ffff9f20324930c0 8111 python 192.168.1.150 8000 192.168.1.2 61537 FIN_WAIT1 -> FIN_WAIT1 0.036

ffff9f20324930c0 0 swapper/9 192.168.1.150 8000 192.168.1.2 61537 FIN_WAIT1 -> FIN_WAIT2 1.381

ffff9f20324930c0 0 swapper/9 192.168.1.150 8000 192.168.1.2 61537 FIN_WAIT2 -> CLOSE 0.006
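The MS column is the time spent in OLDSTATE, in milliseconds, so the rows above can be read as a timeline of a single connection. A small parser (parse_tcpstates is a hypothetical helper) makes that explicit: summing MS after the LISTEN -> SYN_RECV row gives about 3.72 ms from SYN_RECV to CLOSE.

```python
from typing import List, Tuple

# Rows copied from the tcpstates -L 8000 output above
SAMPLE = """\
SKADDR C-PID C-COMM LADDR LPORT RADDR RPORT OLDSTATE -> NEWSTATE MS
ffff9f20324930c0 0 swapper/9 0.0.0.0 8000 0.0.0.0 0 LISTEN -> SYN_RECV 0.000
ffff9f20324930c0 0 swapper/9 192.168.1.150 8000 192.168.1.2 61537 SYN_RECV -> ESTABLISHED 0.016
ffff9f20324930c0 8111 python 192.168.1.150 8000 192.168.1.2 61537 ESTABLISHED -> FIN_WAIT1 2.280
ffff9f20324930c0 8111 python 192.168.1.150 8000 192.168.1.2 61537 FIN_WAIT1 -> FIN_WAIT1 0.036
ffff9f20324930c0 0 swapper/9 192.168.1.150 8000 192.168.1.2 61537 FIN_WAIT1 -> FIN_WAIT2 1.381
ffff9f20324930c0 0 swapper/9 192.168.1.150 8000 192.168.1.2 61537 FIN_WAIT2 -> CLOSE 0.006
"""


def parse_tcpstates(text: str) -> List[Tuple[str, str, float]]:
    """Return (old_state, new_state, ms_in_old_state) for each data row."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if not fields or fields[0] == "SKADDR" or "->" not in fields:
            continue  # skip blank lines and the header
        arrow = fields.index("->")
        rows.append((fields[arrow - 1], fields[arrow + 1], float(fields[arrow + 2])))
    return rows


if __name__ == "__main__":
    rows = parse_tcpstates(SAMPLE)
    for old, new, ms in rows:
        print(f"{old:>12} -> {new:<12} spent {ms:.3f} ms in {old}")
    print(f"SYN_RECV to CLOSE: {sum(ms for _, _, ms in rows[1:]):.3f} ms")
```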

The details of the firewall filtering logic at the IP layer will be analyzed in a later post.

5. Summary

This post covered some of the networking tools in the bcc tools collection and tried each of them out in a real environment.

6. References

2. TCP 连接排故:使用 BPF BCC工具包进行网络跟踪 (TCP connection troubleshooting: network tracing with the BPF BCC toolkit)

3. GPT